Keywords AI

Debugging Large Language Models (LLMs) is tricky. Here's what you need to know:

- Fixing made-up info

- Improving prompts

- Speeding up performance

- Correcting context errors

- Reducing bias

- Enhancing security

- Managing growth

What is LLM Debugging?

LLM debugging finds and fixes errors in large language model apps. It's crucial for building AI that's accurate, fast, and fair.

Definition and Purpose

LLM debugging goes beyond regular code fixes. It's about making AI models give correct, useful answers. The main goals?

- Fix wrong info

- Speed up responses

- Remove unfair bias

Here's a real-world example: In March 2023, a big bank's AI chatbot gave bad financial advice. Their stock dropped 2%. Good debugging could've stopped this costly mistake.

Main Parts of LLM Debugging

LLM debugging has four key components:

- Error tracking: Find where and why the model messes up.

- Performance checking: Make sure it runs fast and smooth.

- Bias testing: Look for unfair treatment of different groups.

- Security testing: Guard against misuse or attacks.

7 Big LLM Debugging Problems and How to Fix Them

- Fixing Made-Up Information

LLMs can spit out wrong or nonsensical info. It's called "hallucinations". Why? The model's guessing game and its training data quality. How to tackle this:

- Check facts against trusted sources

- Use specialized models for niche tasks

- Try RAG to tap into verified databases

- Making Better Prompts

Bad prompts = useless responses. To improve:

- Refine step-by-step

- Track changes with tools

- Try chain-of-thought prompting

- Dealing with Slower Performance

LLMs can crawl. To speed up:

- Keep an eye on performance

- Use GPUs for inference

- Cache with libraries like functools

- Fixing Context Mistakes

LLMs can misread context. To fix:

- Clear up vague inputs

- Boost context retention

- Tweak text chunking

- Reducing Bias and Unfairness

Biased outputs? Not good. To fight it:

- Use diverse training data

- Add fairness rules in training

- Use bias detection tools

- Improving Security

LLMs face risks like prompt injection attacks. To beef up security:

- Encrypt sensitive data

- Use access controls

- Do regular security checks

- Managing Growth and Resources

Scaling LLMs? You need smart resource management:

- Try distributed computing

- Optimize model structures

- Use cloud platforms with auto-scaling

| Technique | What It Does | When to Use It |

|---|---|---|

| Prompt Engineering | Tweaks prompts for better answers | First optimization step |

| RAG | Adds external data for context | For context issues |

| Fine-tuning | Adapts the model for specific tasks | For niche domain work |

Tools for LLM Debugging

Debugging LLMs can be tricky. But don't worry - there are tools to help. Let's look at some top options:

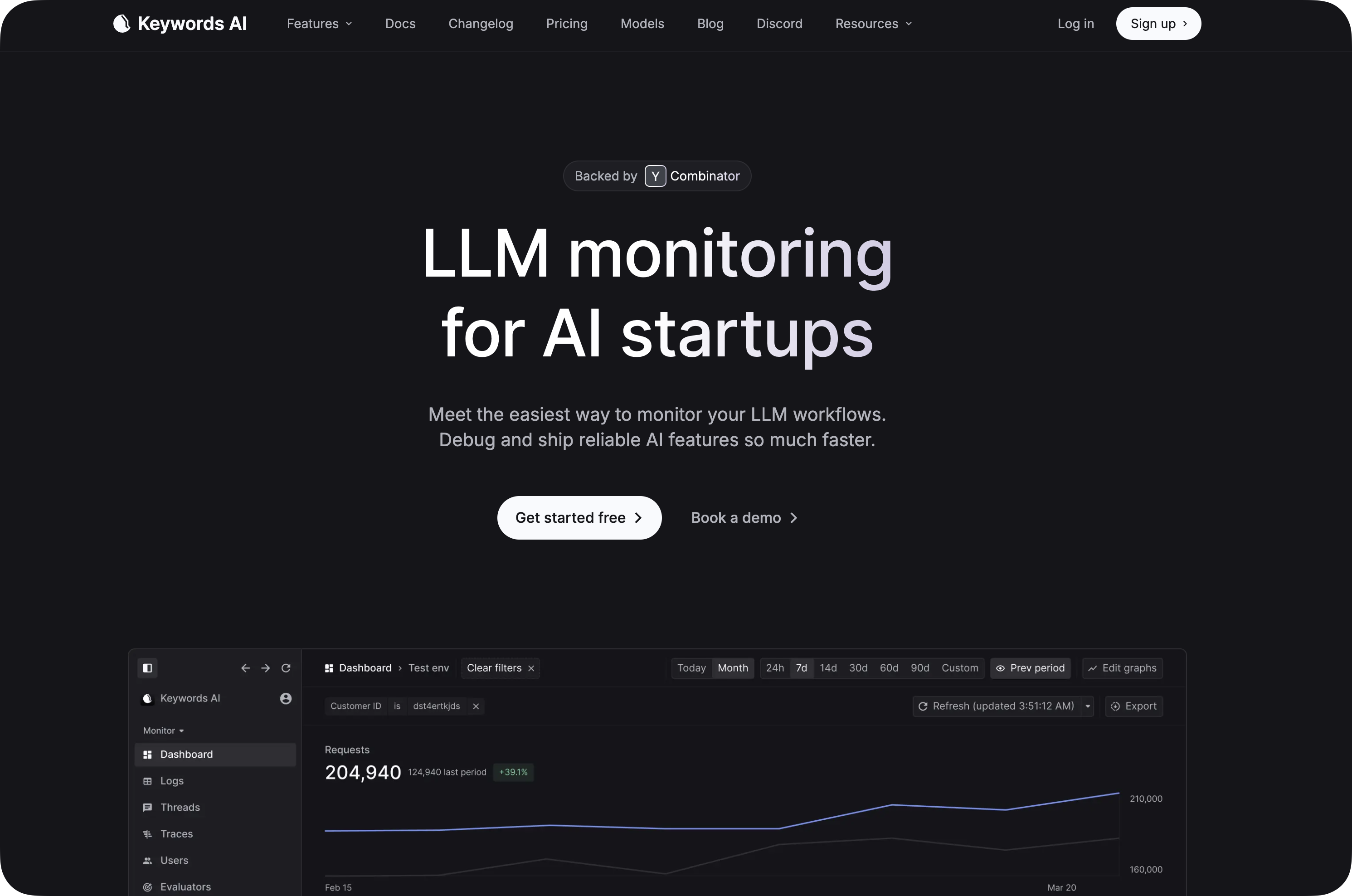

Keywords AI: Your All-in-One Solution

Keywords AI is like a Swiss Army knife for LLM debugging. Here's what it offers:

- One API for 200+ LLMs

- Detailed logs for every request

- Dashboard with 20+ metrics

- Model playground for testing

It's perfect if you want to simplify your LLM workflow.

Other Cool Tools

| Tool | What It Does | Best For |

|---|---|---|

| Helicone | Logs, tracks, caches | Saving money |

| Phoenix | Traces, evaluates, manages datasets | Comparing performance |

| OpenLLMetry | Monitors in real-time, tests quality | Checking output |

Tips for Better LLM Debugging

Debugging LLMs doesn't have to be a headache. Here's how to keep your AI applications running smoothly:

Use Verbose and Debug Modes

Want to peek under the hood? Try this:

- Turn on Verbose Mode for key event update

- Enable Debug Mode for a full event log

In Jupyter or Python, just use set_verbose(True) and set_debug(True) to get detailed logs.

Implement Tracing

For complex apps, tracing is your friend. LangSmith Tracing helps you log and visualize events, making it easier to spot issues.

Create a Knowledge Base

Build a go-to guide for common issues. Include:

- Detailed error descriptions

- Steps to reproduce issues

- Verified solutions and fixes

This saves time and helps your team learn from past challenges.

Isolate and Reproduce Bugs

Found a bug? Here's what to do:

- Isolate it with minimal code

- Strip away unnecessary components

- Focus on core functionality

- Make and test assumptions

- Document your hypotheses

- Test one variable at a time

- Repeat until fixed

- Keep iterating systematically

- Document successful fixes