Keywords AI

LLM logging with Vercel AI SDK

OpenAI leads the LLM market, while Anthropic and Google's Gemini are also gaining popularity. Developers rely on these models to build reliable AI products, but working directly with the base OpenAI or Anthropic SDKs can be cumbersome. Frameworks like LangChain and the Vercel AI SDK simplify development and handle complex workflows.

Vercel's AI SDK provides a unified API for text generation, structured data, and tool calls across LLM providers. It also provides UI hooks for building chat interfaces, which speeds up app development. However, it doesn't come with an observability dashboard for LLM apps, so monitoring performance and debugging issues is difficult.

Once your app's basic functionality is in place, monitoring and improving its performance becomes crucial. Keywords AI fills this gap.

In this post, we'll show how to add production LLM logging to a simple AI app in about 5 minutes.

Installing the AI SDK and Importing It into Your Codebase

Using Vercel's starter code, you can quickly create a local project. Then install the AI SDK's Anthropic provider (`@ai-sdk/anthropic`) and import it so your app can use Anthropic models.

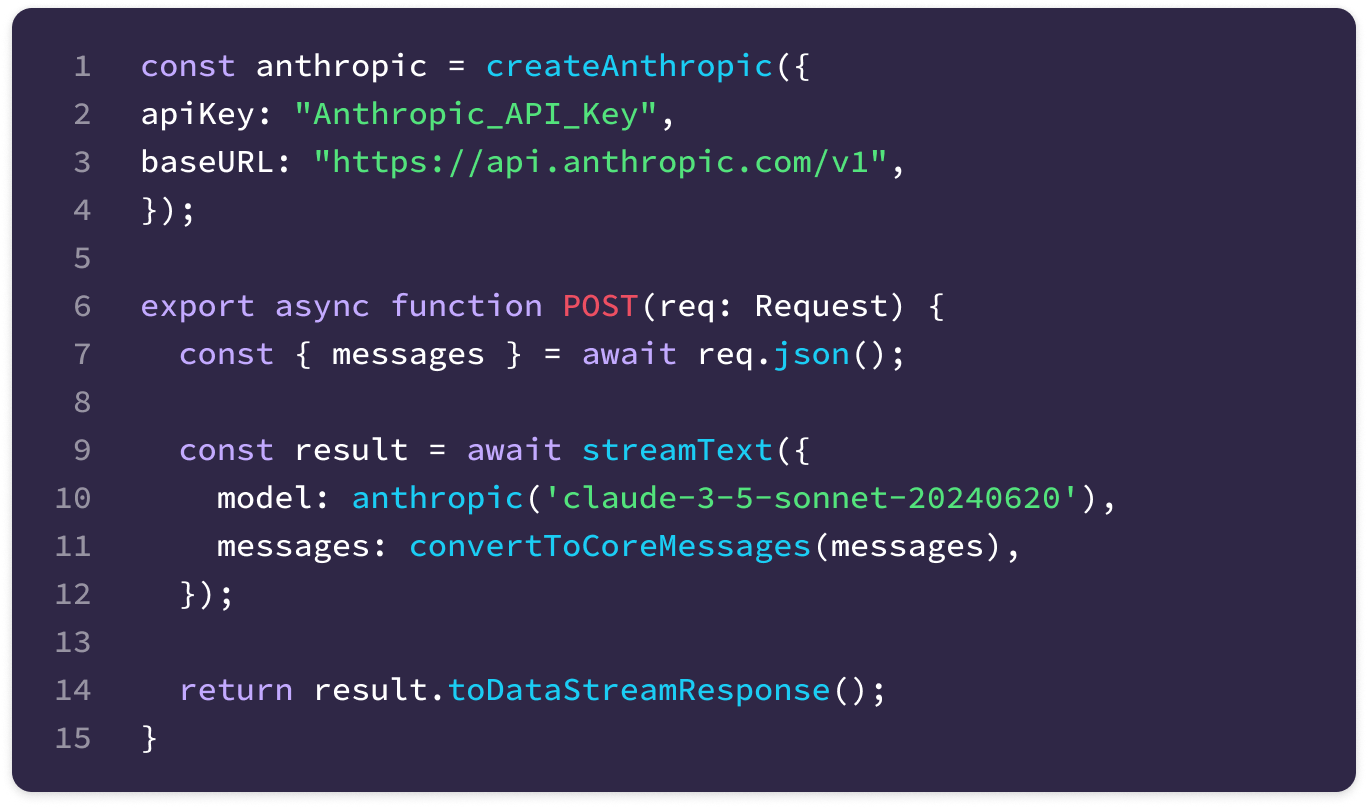

To make the app functional, make two changes in your `api/route.ts` file:

- Import the Anthropic provider: import the necessary modules and configure your API key.

- Call Anthropic models: create a route handler that sends requests to the Anthropic API so your app can generate AI responses.
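The two steps above can be sketched as a route handler. This is a minimal sketch under stated assumptions, not the starter's actual code: it assumes a Next.js App Router route, where `api/route.ts` exports an async `POST(req: Request)` function, and it injects the model call as a function so the handler stays self-contained and testable. The names `makePostHandler`, `ChatMessage`, and `GenerateFn` are hypothetical.

```typescript
// Shape of the chat messages the frontend posts. Assumption: plain { role, content }
// pairs, which is also the shape the AI SDK's generateText accepts via `messages`.
type ChatMessage = { role: "system" | "user" | "assistant"; content: string };

// Hypothetical seam for the model call so the handler runs without network access.
// In the real app this function would call the Anthropic model through the AI SDK.
type GenerateFn = (messages: ChatMessage[]) => Promise<string>;

// Builds a Next.js-style POST route handler (export the result as POST from api/route.ts).
export function makePostHandler(generate: GenerateFn) {
  return async function POST(req: Request): Promise<Response> {
    const body = (await req.json()) as { messages?: ChatMessage[] };
    const messages = body.messages;

    // Reject malformed requests before spending tokens on them.
    if (!Array.isArray(messages) || messages.length === 0) {
      return new Response("`messages` must be a non-empty array", { status: 400 });
    }

    // Delegate to the injected model call and return its text as the response body.
    const text = await generate(messages);
    return new Response(text, {
      headers: { "Content-Type": "text/plain; charset=utf-8" },
    });
  };
}
```

With the AI SDK, the injected function could wrap `generateText` from `ai`, for example `export const POST = makePostHandler(async (messages) => (await generateText({ model: anthropic("claude-3-5-sonnet-20240620"), messages })).text);`, where `anthropic` comes from `@ai-sdk/anthropic` and the model ID is only an example. A chat UI that streams tokens would use `streamText` instead; the non-streaming call just keeps the sketch short.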

With these changes in place, run your app to view the frontend and check how it performs.

The app is working! What's next?

Track performance and improve it

Keywords AI is an LLM monitoring and evaluation platform that lets you monitor, debug, and improve your AI app's performance. Because it integrates with the AI SDK, you can add monitoring by changing only a few lines of code.

Steps to Integrate Keywords AI:

Generate an API Key: Visit Keywords AI and obtain your API key.

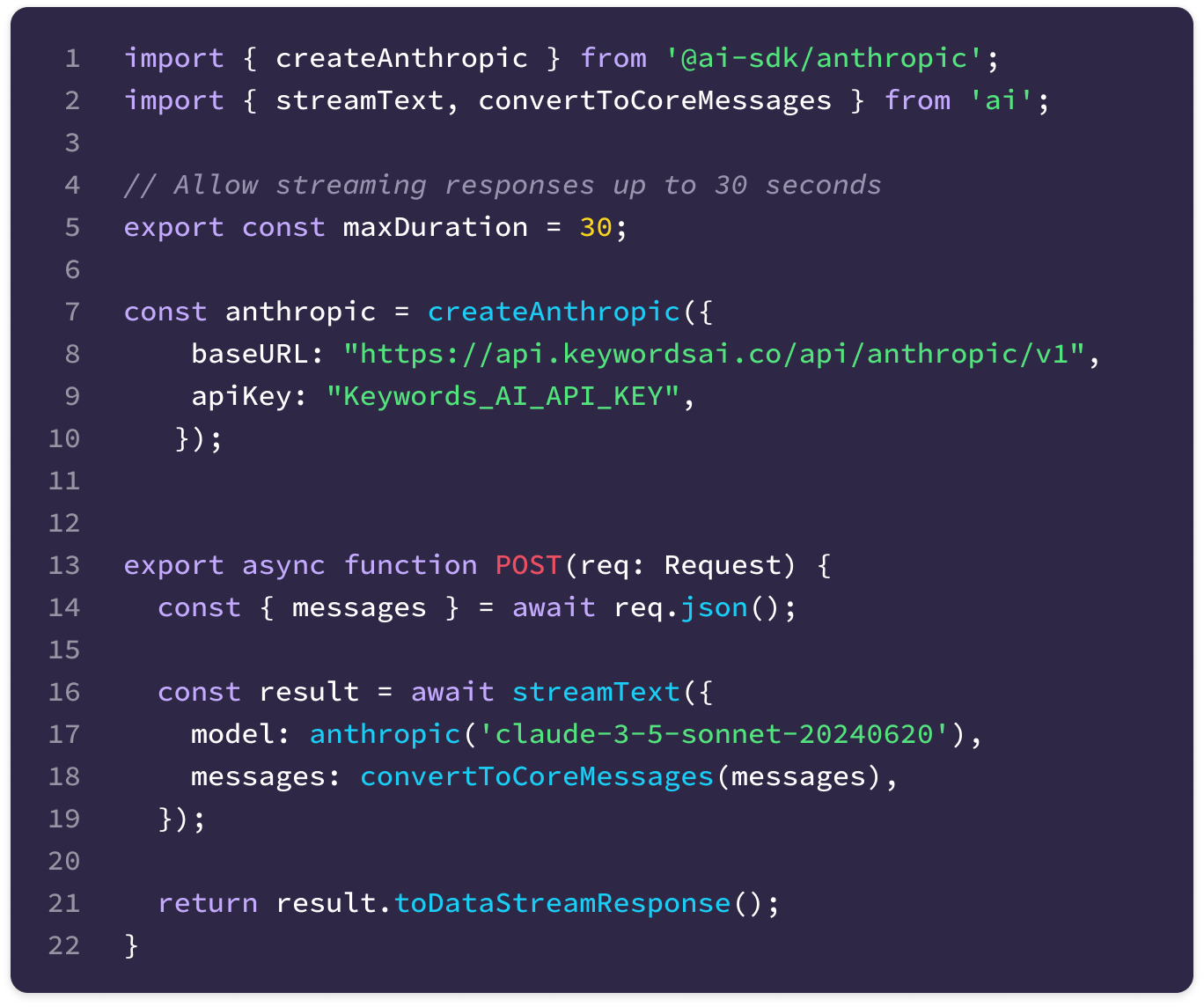

Update Your Code:

- Replace the `baseURL` with `https://api.keywordsai.co/api/anthropic/v1`.

- Replace the `apiKey` with your Keywords AI API key.
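The two replacements above could be kept in one small helper so the app can switch between logging through Keywords AI and calling Anthropic directly. This is a minimal sketch, not code from Keywords AI: the helper `resolveAnthropicConfig` and the environment variable names `KEYWORDSAI_API_KEY` and `ANTHROPIC_API_KEY` are hypothetical choices, and it assumes `@ai-sdk/anthropic` uses `https://api.anthropic.com/v1` when no `baseURL` is set.

```typescript
// Endpoint @ai-sdk/anthropic uses by default (assumption noted in the lead-in).
const ANTHROPIC_BASE_URL = "https://api.anthropic.com/v1";
// Keywords AI's Anthropic endpoint, as given in this post.
const KEYWORDS_AI_ANTHROPIC_BASE_URL = "https://api.keywordsai.co/api/anthropic/v1";

// The two settings this post says to change.
interface ProviderConfig {
  baseURL: string;
  apiKey: string;
}

// Hypothetical env var names; use whatever your deployment defines.
type Env = Record<string, string | undefined>;

export function resolveAnthropicConfig(env: Env): ProviderConfig {
  const keywordsKey = env.KEYWORDSAI_API_KEY;
  if (keywordsKey) {
    // Send requests through Keywords AI so each LLM call is logged.
    return { baseURL: KEYWORDS_AI_ANTHROPIC_BASE_URL, apiKey: keywordsKey };
  }

  const anthropicKey = env.ANTHROPIC_API_KEY;
  if (!anthropicKey) {
    throw new Error("Set KEYWORDSAI_API_KEY or ANTHROPIC_API_KEY");
  }

  // Fall back to calling Anthropic directly (no Keywords AI logging).
  return { baseURL: ANTHROPIC_BASE_URL, apiKey: anthropicKey };
}
```

In `api/route.ts`, the result could then be passed to the provider factory, for example `const anthropic = createAnthropic(resolveAnthropicConfig(process.env));`, with `createAnthropic` imported from `@ai-sdk/anthropic`.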

Now, you can monitor your AI app directly through Keywords AI.

Full Code Snippet: