Keywords AI

What Is an LPU, and Why Is It Faster Than GPUs?

What is a Language Processing Unit (LPU)?

A Language Processing Unit (LPU) is a specialized chip developed by Groq, designed to handle the unique demands of Large Language Models (LLMs). Unlike traditional processors, LPUs focus on sequential processing, making them ideal for language-related tasks.

Try Groq Models on Keywords AI

Keywords AI supports all Groq models, including Llama 3.1 405B, Llama 3.1 70B, and Llama 3.1 8B. You can access these models through the Keywords AI platform to test them and deploy them in your applications. Check out Keywords AI for more information on how to use Groq models.

How LPUs Work

LPUs are built to address two main bottlenecks in LLM processing:

- Compute density

- Memory bandwidth
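To see why memory bandwidth matters, consider a common back-of-the-envelope estimate (a general rule of thumb, not a formula published by Groq): when a dense model generates text for a single user, producing each token requires reading every weight from memory once, so memory bandwidth divided by model size caps the achievable tokens per second. The sketch below ignores KV-cache reads, compute time, and interconnect overhead, and the bandwidth values are hypothetical round numbers, not the specs of any real chip.

```python
def decode_tokens_per_second_upper_bound(
    n_params: float, bytes_per_param: float, mem_bandwidth_bytes_per_s: float
) -> float:
    """Rough ceiling on single-stream (batch size 1) decode speed.

    Assumes every weight is read once per generated token, so the
    memory system limits throughput to bandwidth / model size.
    Ignores KV-cache traffic, compute time, and interconnect overhead.
    """
    model_bytes = n_params * bytes_per_param
    return mem_bandwidth_bytes_per_s / model_bytes


# Hypothetical example: a 70B-parameter model stored as 16-bit weights
# (2 bytes each), evaluated at illustrative bandwidths (not real chip specs).
N_PARAMS = 70e9
BYTES_PER_PARAM = 2

for label, bandwidth in [("1 TB/s", 1e12), ("3 TB/s", 3e12), ("10 TB/s", 10e12)]:
    bound = decode_tokens_per_second_upper_bound(N_PARAMS, BYTES_PER_PARAM, bandwidth)
    print(f"{label}: at most {bound:.1f} tokens/s")
```

Because the bound scales linearly with bandwidth, any design that moves weights to the compute units faster raises the ceiling on per-user generation speed.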

The architecture of LPUs sets them apart from traditional processors:

- Single-Core Architecture: Unlike multi-core processors, LPUs use a single-core design. This simplifies processing and reduces coordination overhead.

- Synchronous Networking: Even in large-scale deployments, LPUs maintain synchronous communication, ensuring efficient data flow.

- Sequential Processing: LPUs excel at sequential tasks, which is ideal for language processing where context and order are crucial.

- Optimized Memory Access: LPUs keep model data in fast on-chip SRAM rather than in external memory, reducing latency in data retrieval and processing.
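The sequential nature of the workload comes from how LLMs generate text: each new token is predicted from all the tokens before it, so step t cannot begin until step t-1 has finished. The minimal sketch below shows greedy autoregressive decoding; `toy_model` is a hypothetical stand-in for a real LLM, and the code illustrates the shape of the workload, not how an LPU executes it.

```python
from typing import Callable, List


def generate(
    next_token: Callable[[List[int]], int], prompt: List[int], max_new_tokens: int
) -> List[int]:
    """Greedy autoregressive decoding.

    Each step feeds the whole sequence so far back into the model,
    so the steps form a strict chain and cannot run in parallel.
    """
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        # The next token depends on every token generated before it.
        tokens.append(next_token(tokens))
    return tokens


# Hypothetical stand-in for an LLM: predicts (sum of context) mod 10.
def toy_model(context: List[int]) -> int:
    return sum(context) % 10


print(generate(toy_model, [1, 2], 4))  # → [1, 2, 3, 6, 2, 4]
```

Since the total generation time is the sum of these dependent steps, hardware that shortens the latency of each individual step speeds up the whole response.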

Why LPUs Are Faster

Several factors contribute to the superior speed of LPUs:

- Specialized Design: LPUs are purpose-built for language tasks, eliminating unnecessary components found in general-purpose processors.

- Reduced Bottlenecks: By addressing compute density and memory bandwidth issues, LPUs remove major performance bottlenecks.

- Efficient Data Handling: The synchronous, sequential nature of LPUs allows for more efficient handling of language data.

- High Accuracy at Lower Precision: LPUs maintain high accuracy even when operating at lower precision levels, allowing for faster processing without significant quality loss.

- Auto-compilation: Groq's compiler can automatically compile LLMs with over 50 billion parameters, streamlining the deployment of large models.
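The idea behind "high accuracy at lower precision" can be illustrated with a generic technique: symmetric 8-bit quantization. This is a minimal sketch of a standard method, not Groq's own numerics scheme (which the text above does not describe), and the weight values are hypothetical. Each value is mapped to an integer in [-127, 127] using one shared scale factor, so the rounding error per value is at most half the scale, while storage drops to a quarter of 32-bit floats.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization to signed 8-bit integers."""
    max_abs = max(abs(v) for v in values)
    # One scale for the whole tensor; guard against an all-zero input.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map the 8-bit integers back to approximate real values."""
    return [x * scale for x in q]


weights = [0.42, -1.37, 0.05, 0.91, -0.66]  # hypothetical example weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(q)
print(f"max abs error: {max_err:.4f} (bound: scale/2 = {scale / 2:.4f})")
```

Real systems use more refined schemes (per-channel scales, mixed precision for sensitive layers), but the trade-off is the same: fewer bits per value in exchange for a small, bounded error.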

The Benefits of LPUs

Speed

Recent tests have shown impressive results:

- Llama 3 70B ran at over 250 tokens per second

- Mixtral achieved nearly 500 tokens per second per user

For context, even at 250 tokens per second, a user could generate a 4,000-word essay in well under a minute.
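The arithmetic behind this kind of estimate can be checked directly. The sketch below converts words to tokens and divides by decode speed; the ratio of about 1.3 tokens per English word is an assumption (it varies by tokenizer and text), and the speeds are the figures quoted in the list above.

```python
def seconds_to_generate(words: int, tokens_per_word: float, tokens_per_second: float) -> float:
    """Time to generate a text of the given length at a constant decode speed."""
    return words * tokens_per_word / tokens_per_second


# Assumption: roughly 1.3 tokens per English word.
TOKENS_PER_WORD = 1.3

for model, speed in [("Llama 3 70B", 250), ("Mixtral", 500)]:
    t = seconds_to_generate(4000, TOKENS_PER_WORD, speed)
    print(f"{model} at {speed} tokens/s: ~{t:.0f} s for 4,000 words")
```

Under this assumption, the essay takes roughly 21 seconds at 250 tokens per second and roughly 10 seconds at 500, ignoring time to first token and network latency.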

Efficiency

LPUs offer:

- Higher processing speeds

- Improved throughput

- High accuracy, even at lower numerical precision

These benefits make LPUs particularly valuable for industries like finance, government, and technology, where rapid and accurate data processing is crucial.

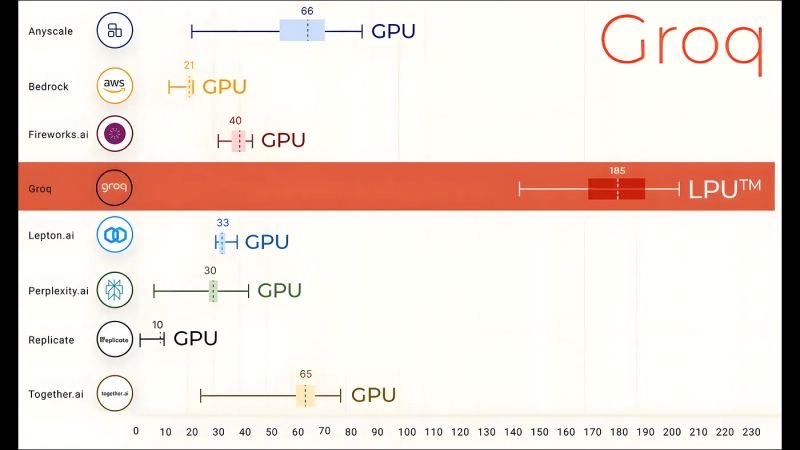

LPUs vs. GPUs

While LPUs excel at inference tasks, GPUs still lead in model training. Both have their strengths:

- LPUs: Optimal for inference, that is, applying trained models to new data

- GPUs: Best for the initial training of AI models

The future may see LPUs and GPUs working together, each focusing on their strengths.

The Origins of LPU

Jonathan Ross, who started the Tensor Processing Unit (TPU) project at Google, founded Groq in 2016. The company's innovative approach involved developing software and compilers before designing the hardware, resulting in a highly optimized system.

The Software-First Approach

Groq's innovative approach to developing LPUs involved:

- Focusing on software and compiler development before hardware design.

- Ensuring optimal communication between chips.

- Creating a system where software guides inter-chip communication.

This software-first strategy resulted in a highly optimized system that outperforms traditional setups in speed, cost efficiency, and energy consumption.

Conclusion

Language Processing Units represent a significant advancement in AI hardware. By combining architectural innovations with a software-optimized approach, LPUs achieve remarkable speeds in language processing tasks. As we enter a new era of LLMs, technologies like LPUs will play a crucial role in pushing the boundaries of what's possible in natural language processing and generation.