Keywords AI

5 best LLM playgrounds in 2024

Exploring the capabilities of LLMs has become more accessible and exciting in 2024, thanks to various innovative playgrounds. Whether you're a developer, researcher, or AI enthusiast, having the right platform can significantly enhance your work with LLMs.

In this post, we'll introduce you to the 5 best LLM playgrounds of 2024. From versatile platforms supporting a wide range of models to specialized tools with unique features, these playgrounds offer diverse options to suit different needs and preferences. Dive in to discover the top tools that can help you unlock new possibilities in the world of AI.

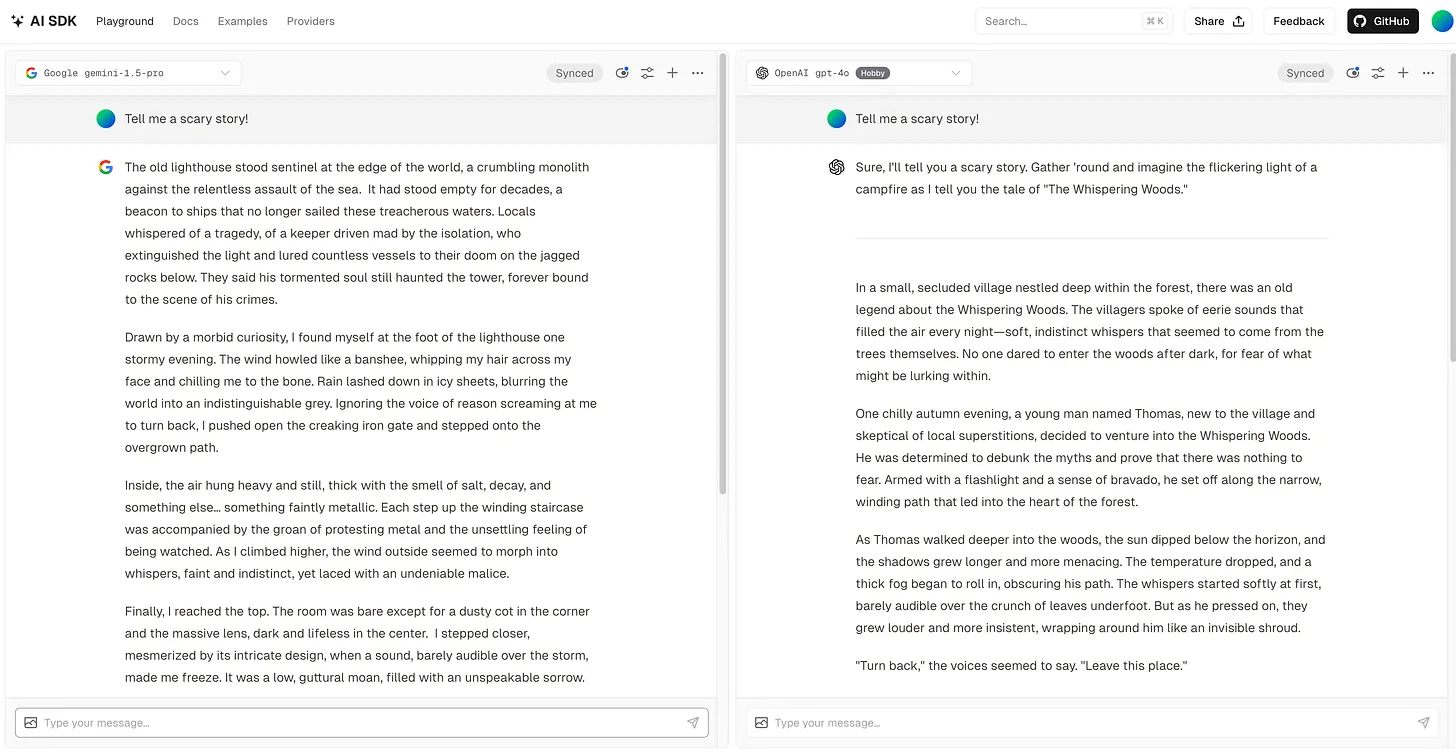

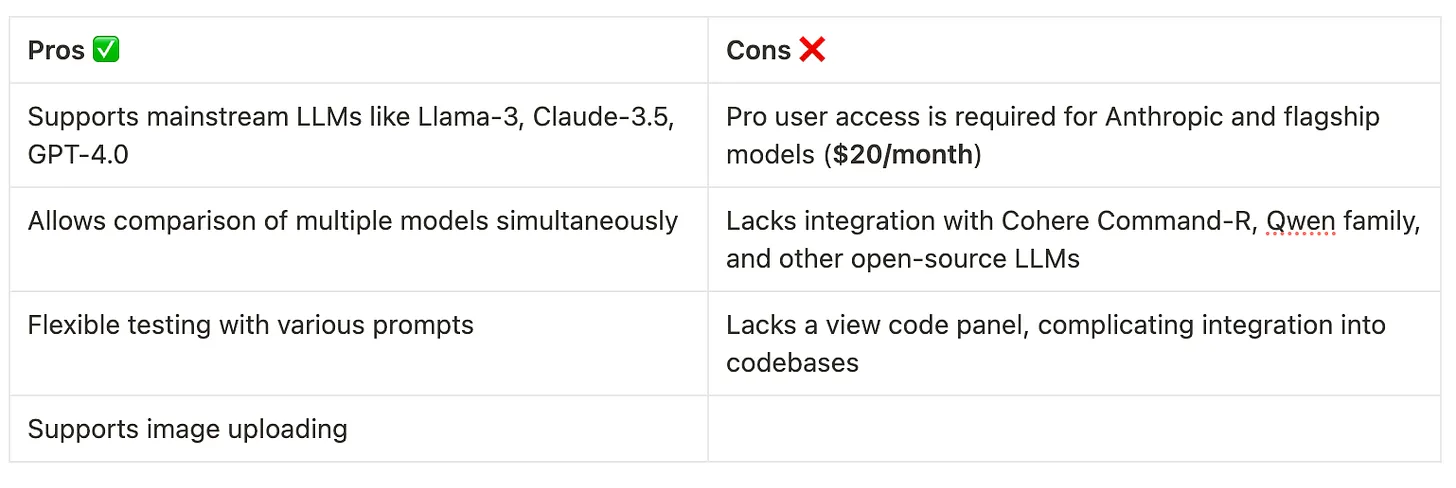

Vercel AI ⭐️⭐️⭐️⭐️⭐️

Vercel AI offers an impressive playground for those looking to experiment with mainstream LLMs such as Llama-3, Claude-3.5, and GPT-4o. One of its standout features is the ability to compare different models on the same prompt, or to test several prompts across multiple models simultaneously. This flexibility is particularly valuable for users who need to test and evaluate multiple models in a single session.
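The same-prompt comparison described above can also be approximated outside a playground. The following is a minimal sketch, not Vercel's implementation: `compare_models` and the two stub model functions are hypothetical names introduced purely for illustration, and each stub would be swapped for a call to a real provider client in practice.

```python
from typing import Callable, Dict

# A "model" here is any callable mapping a prompt string to a response string.
# These stubs are hypothetical placeholders standing in for real provider clients.
ModelFn = Callable[[str], str]


def stub_model_a(prompt: str) -> str:
    """Placeholder model: echoes the prompt in upper case."""
    return f"[model-a] {prompt.upper()}"


def stub_model_b(prompt: str) -> str:
    """Placeholder model: echoes the prompt reversed."""
    return f"[model-b] {prompt[::-1]}"


def compare_models(prompt: str, models: Dict[str, ModelFn]) -> Dict[str, str]:
    """Send the same prompt to every model and collect responses by model name."""
    return {name: fn(prompt) for name, fn in models.items()}


if __name__ == "__main__":
    results = compare_models(
        "hello",
        {"model-a": stub_model_a, "model-b": stub_model_b},
    )
    for name, response in results.items():
        print(f"{name}: {response}")
```

Keeping each model behind a plain callable means the comparison loop does not need to know which provider sits behind a given name.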

However, access to Anthropic's and other flagship models is restricted to Pro users, which requires a subscription of $20/month. While the Vercel playground supports image uploading, it lacks a View Code panel, making it less friendly for users who wish to integrate models into their codebase. Additionally, it does not integrate with other popular LLMs, such as Cohere Command-R and the Qwen family, limiting its versatility for users who rely on these models.

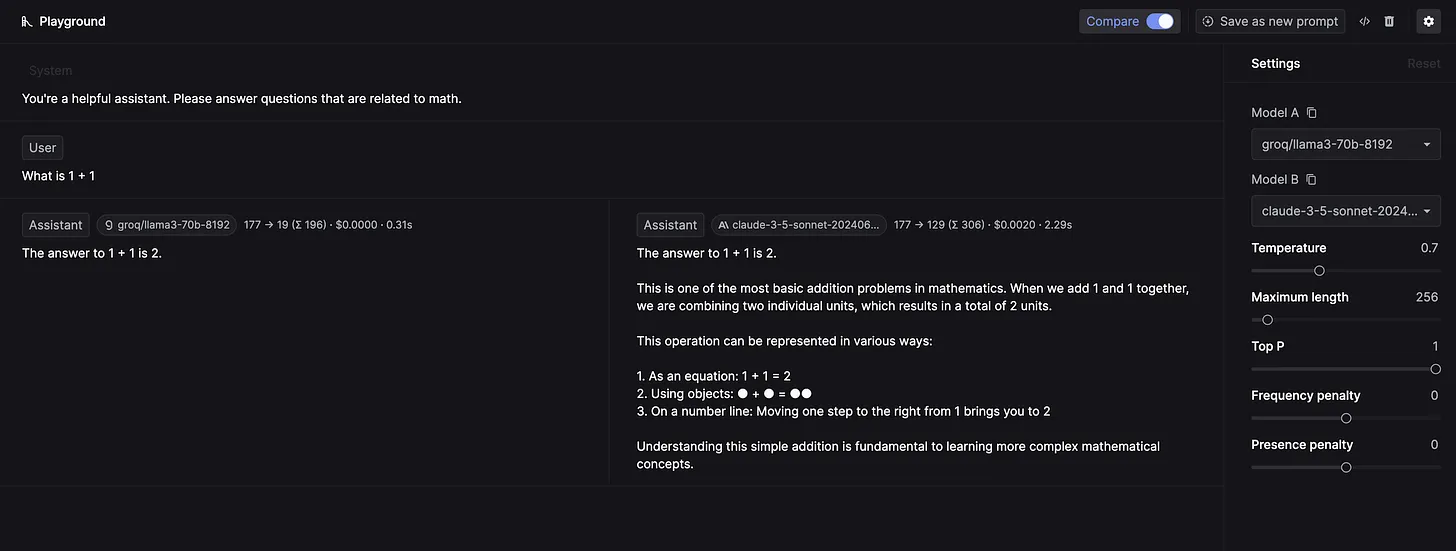

Keywords AI ⭐️⭐️⭐️⭐️⭐️

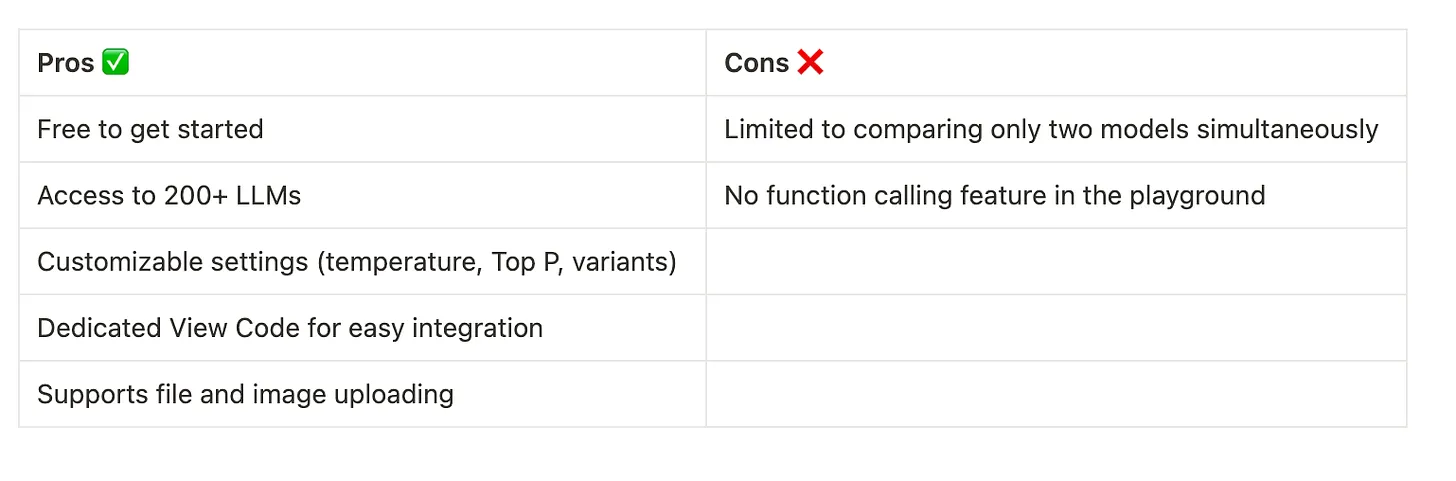

Keywords AI is a unified DevOps platform where developers can access over 200 LLMs and monitor their performance in production. The Keywords AI LLM playground allows users to choose any two of these models to compare their performance. Users can also customize settings such as temperature, Top P, and the number of variants, which lets a model generate multiple responses to a single prompt.

The platform provides a dedicated View Code feature for seamless integration in OpenAI, Anthropic, or LangChain formats. It also supports file and image uploading, making it versatile for various testing scenarios.
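To make the settings above concrete, here is a rough sketch of how they might look in an OpenAI-style chat completions request body. This is not the code the View Code feature emits. `build_chat_request` is a hypothetical helper, `"example-model"` is a placeholder rather than a real model identifier, and mapping the playground's "variants" setting to the `n` field is an assumption.

```python
import json
from typing import Any, Dict


def build_chat_request(
    model: str,
    prompt: str,
    temperature: float = 0.7,
    top_p: float = 1.0,
    variants: int = 1,
) -> Dict[str, Any]:
    """Build an OpenAI-style chat completions request body.

    Assumption: the playground's "variants" setting corresponds to the
    `n` field (number of completions) in the OpenAI request format.
    """
    if variants < 1:
        raise ValueError("variants must be at least 1")
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "top_p": top_p,
        "n": variants,
    }


if __name__ == "__main__":
    # "example-model" is a placeholder, not a real model identifier.
    body = build_chat_request("example-model", "Summarize this post.", variants=2)
    print(json.dumps(body, indent=2))
```

The function only builds the payload; sending it requires a provider endpoint and API key, which are left out here.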

Despite these strengths, the playground does not support comparing more than two models simultaneously, and users cannot add function calling within the playground.

Fireworks AI ⭐️⭐️⭐️⭐️

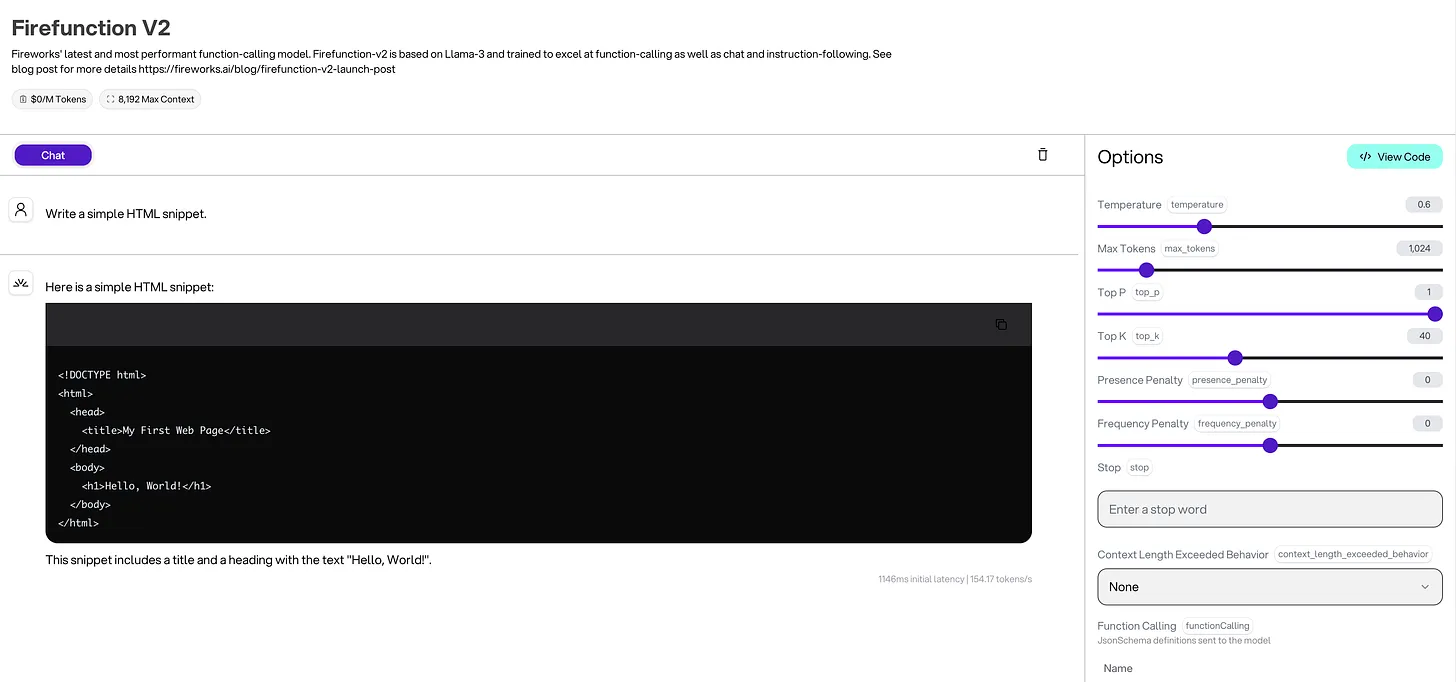

The Fireworks AI playground supports a range of open-source LLMs hosted by Fireworks. Users can test these models and easily integrate them into their projects by copying the integration code from the View Code panel. Additionally, users can customize settings such as temperature, stop words, and function calling, making it a versatile tool for adjusting model behavior.
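For readers unfamiliar with stop words and function calling, the sketch below shows how the two settings are commonly expressed in an OpenAI-style request body, using the `stop` and `tools` fields. It assumes an OpenAI-compatible format and does not reproduce what the View Code panel generates. The `get_weather` tool, the helper names, and `"example-model"` are all hypothetical.

```python
from typing import Any, Dict, List


def weather_tool() -> Dict[str, Any]:
    """A hypothetical tool definition in the OpenAI-style `tools` format."""
    return {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Look up the current weather for a city.",
            # The parameters are described with a JSON Schema object.
            "parameters": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        },
    }


def build_tool_request(model: str, prompt: str, stop: List[str]) -> Dict[str, Any]:
    """Combine a prompt with stop sequences and one callable tool."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.0,  # low temperature for more repeatable output
        "stop": stop,  # generation halts when any of these strings appears
        "tools": [weather_tool()],
    }


if __name__ == "__main__":
    # "example-model" is a placeholder, not a real model identifier.
    request = build_tool_request("example-model", "Weather in Paris?", ["\n\n"])
    print(request["tools"][0]["function"]["name"])
```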

However, although its usage-based pricing is affordable for common use cases, users must add a credit card to get started. The playground does not support image uploading, and users can only experiment with models hosted by Fireworks, meaning they can't try models from OpenAI, Anthropic, or Google. Furthermore, it only allows users to test a single model at a time, which is inconvenient when comparing models' performance.

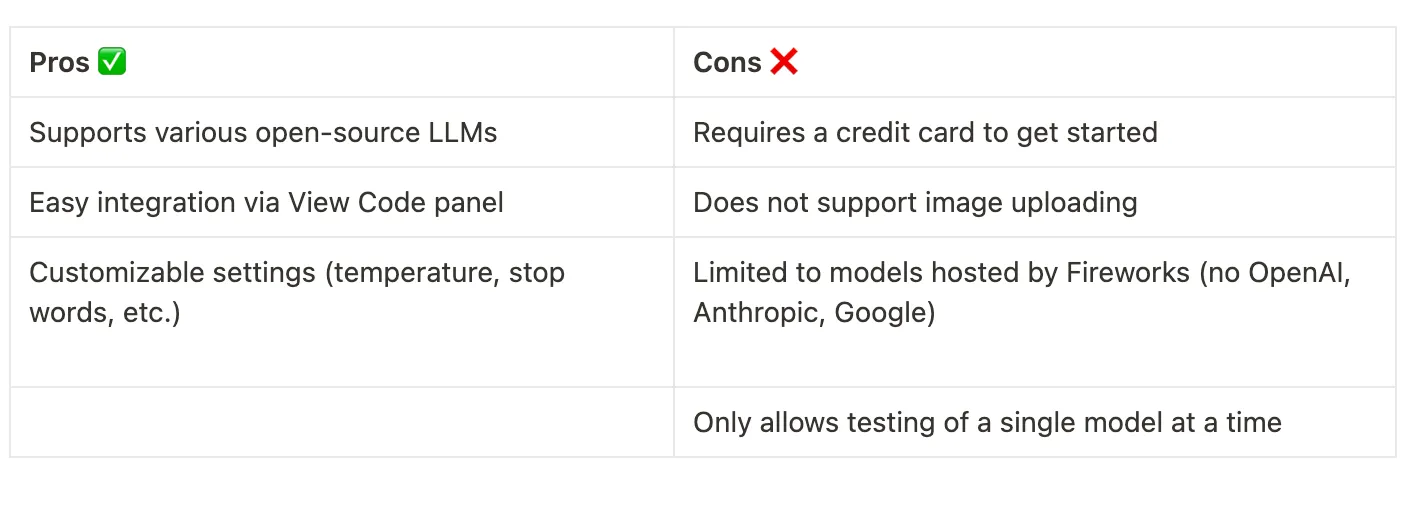

Together AI ⭐️⭐️⭐️⭐️

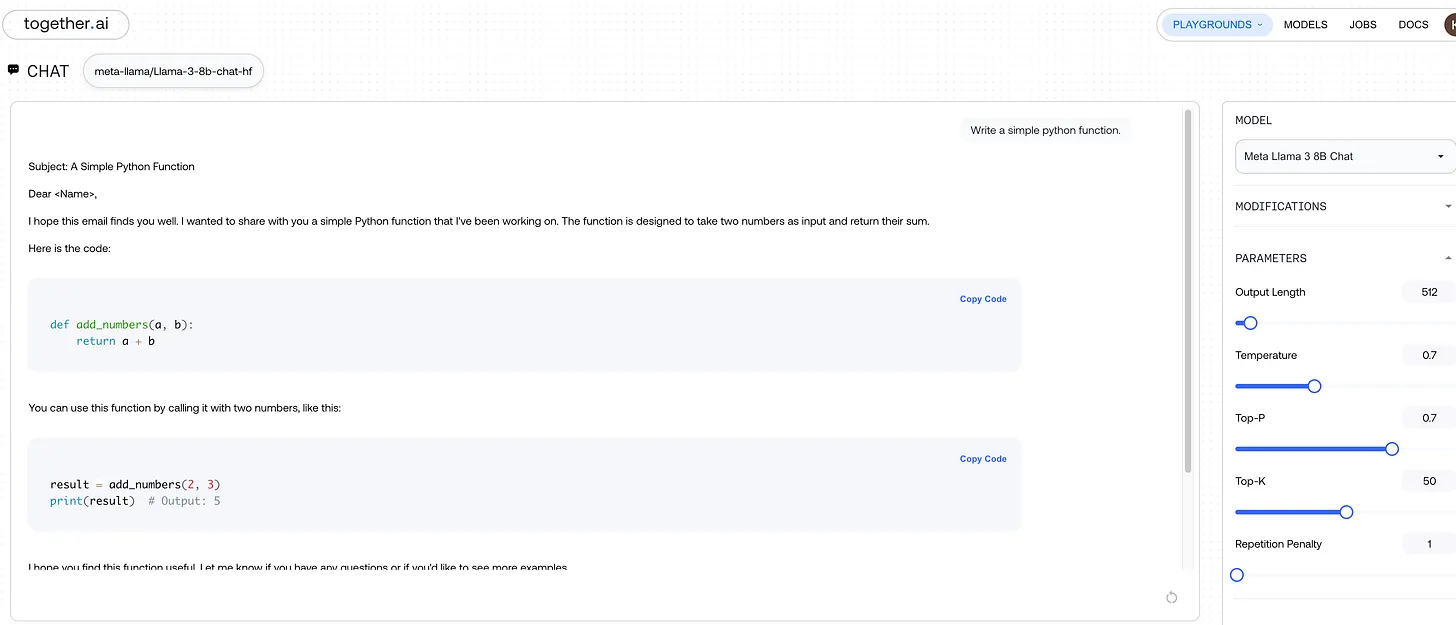

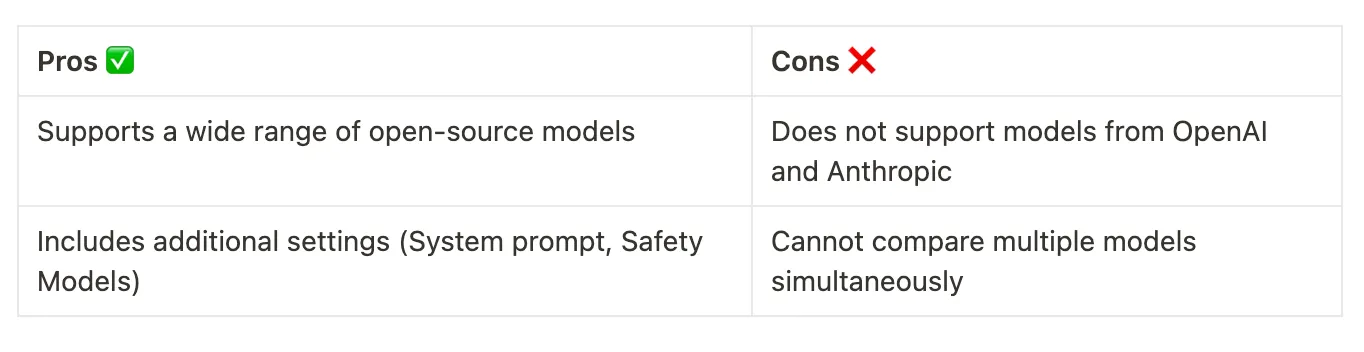

The Together AI playground is quite similar to Fireworks AI but offers more extensive support for open-source models. It includes additional settings such as System prompt and Safety Models, giving users more control over model behavior and safety parameters.

Despite these advantages, it does not support models from providers like OpenAI and Anthropic. Additionally, users cannot compare multiple models simultaneously, limiting its functionality for side-by-side performance evaluation.

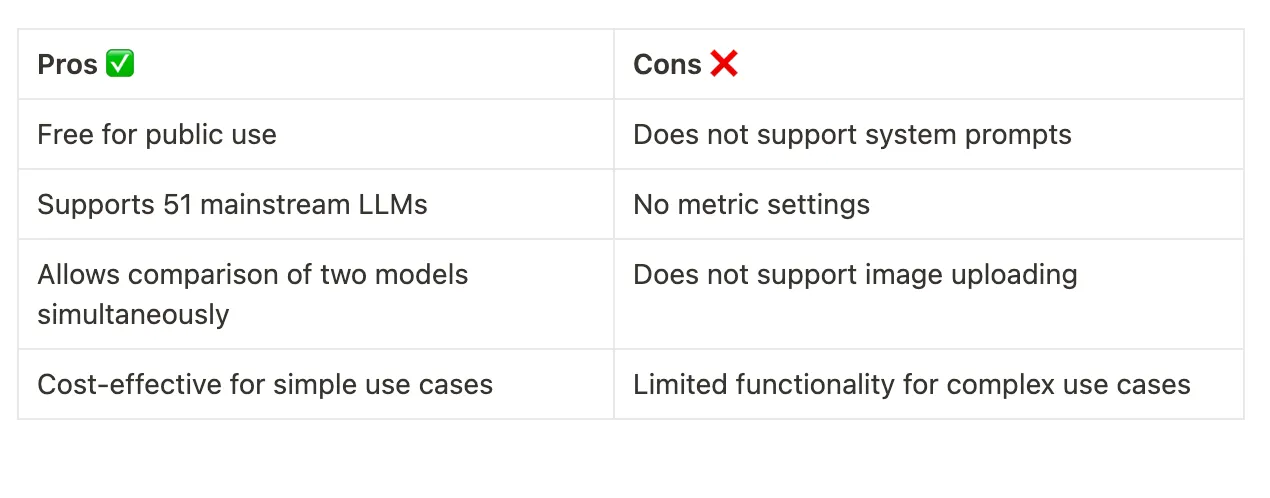

LMSYS Chatbot Arena ⭐️⭐️⭐️⭐️

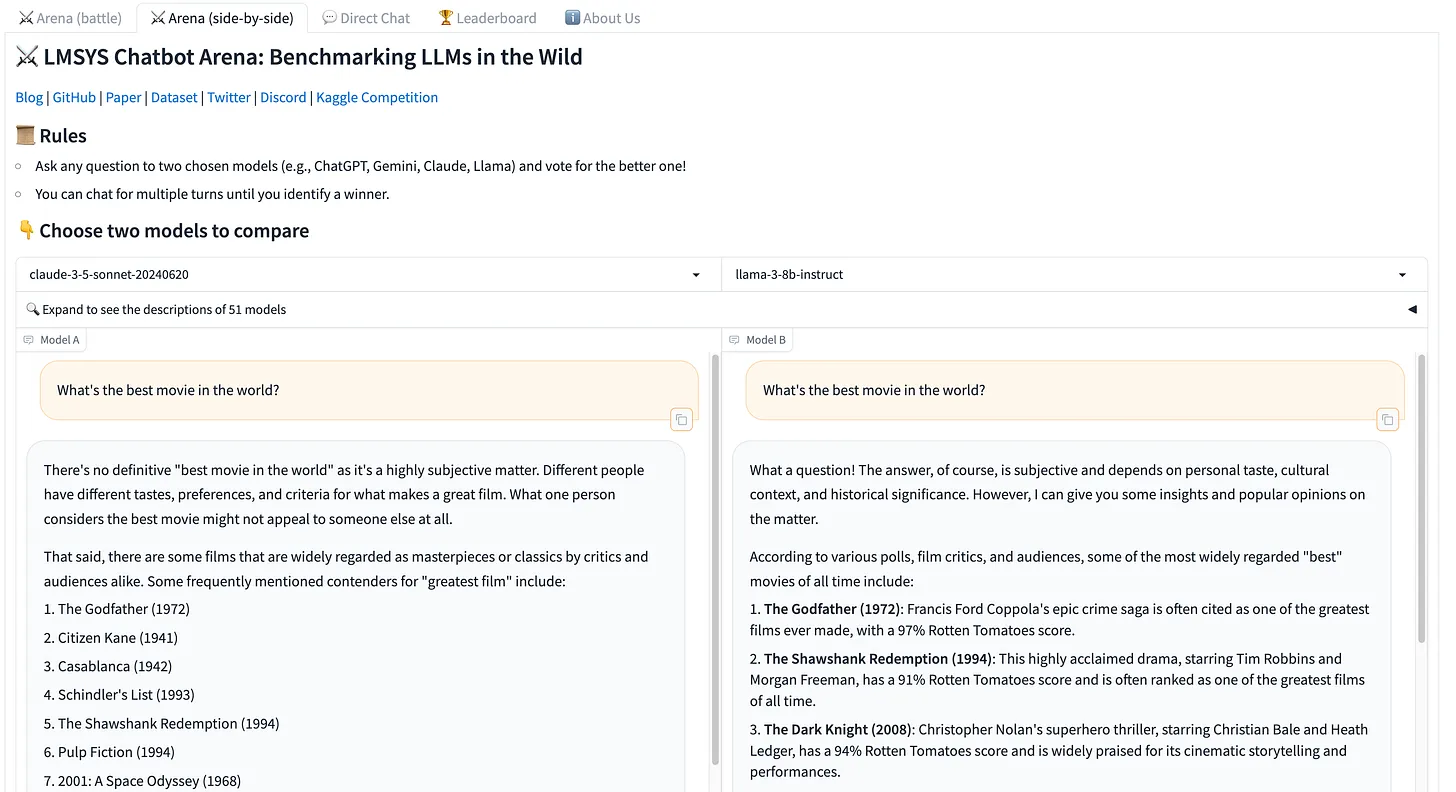

The LMSYS Chatbot Arena playground is completely free for public use and supports 51 mainstream LLMs. It allows users to compare the performance of two models simultaneously, making it a highly cost-effective option for testing models in simple use cases.

However, this playground is limited in its capabilities and does not support system prompts, metric settings, or image uploading, which restricts its usability for more complex scenarios.